What is computerized adaptive testing?

Computerized adaptive testing (CAT) is a type of testing where the difficulty of the questions changes to match the ability level of the person taking the test. When a person gets a question wrong, the next question is easier. Conversely, when a person gets a question right, the next question is harder. This is different from fixed-form tests where everyone taking the test gets the same questions.

What’s wrong with normal tests?

There is nothing inherently “wrong” with fixed-form tests, but they do have several drawbacks. The main drawback, and the one that CAT aims to address, is that fixed-form tests are only really effective at discriminating between test-takers of average ability. Fixed-form tests are worse at discriminating between people at the extremes: there will be people for whom the test is too hard (floor effects) and people for whom the test is too easy (ceiling effects). Ceiling effects, especially, are a big concern.

Why is it so important to differentiate between people?

Well, differentiating between people is the entire point of testing. There are two main reasons that we care about making fine-grained distinctions.

One reason is that it helps us to tailor the education a person receives to match their ability. For example, we might be interested in whether a precocious student is a good candidate for skipping ahead a grade level. And a well-designed test not only provides feedback on someone’s overall ability level, but can also pinpoint the specific areas that they need to work on in order to improve.

The other reason we want to differentiate is to help determine how best to allocate scarce resources. Consider the case of college admissions and the SAT. We would like to differentiate between average people (to determine who is college-ready and who isn’t), but we also would like to differentiate between people at the high end (to determine who would be most likely to flourish as a Mechanical Engineering major at MIT). Current college admissions tests struggle to differentiate between high-ability students. They are simply too easy.

If you are worried that the SAT is too easy for some people, why not just add more hard questions?

You can do that, but there is a trade-off: the more questions you add, the longer the test becomes. The 2016 version of the SAT was already three hours long. As a test grows longer, factors like physical stamina and executive functioning can increasingly influence performance, which is not desirable for an intelligence test.

Why not just have one test that is easy and one test that is hard? Then have people choose which one to take?

That is indeed an option. When the SAT used to have Subject Tests, they offered a Math I test and a Math II test. Math I covered algebra, geometry, and trigonometry while Math II was more advanced, requiring pre-calculus. But this raised the question of how to compare scores between the two tests, since they covered different content and had different difficulties. Is a 750 on the Math I test as impressive as a 600 on the Math II test? It's hard to say. By using CAT, you put everyone on the same scale.

Can't you just have a table that converts scores between the easy test and the hard test?

While a conversion table might seem like a simple solution, it would be subject to significant uncertainty. Additionally, there's the issue of what happens when someone takes a test that isn’t appropriate for their ability level.

Imagine that there’s a farmer boy from Havre, Montana who ditches class every afternoon to race tractors with his brothers. He has no idea that he’s Ramanujan in denim overalls. On a lark, he takes the easy test and aces it. With a perfect score on the easy test, you have successfully put a lower-bound on his ability. But you still don’t know how he might have performed on the harder test.

And CAT fixes that?

Yes! With CAT, not only could you keep standardized tests at their current length and be able to differentiate across the entirety of the ability spectrum, but you could make the tests significantly shorter. Even people of average ability currently have to suffer through far too many questions that are either too easy or too hard. “CATs can reduce testing time by more than 50 percent while maintaining the same level of reliability.”

So how does CAT work exactly?

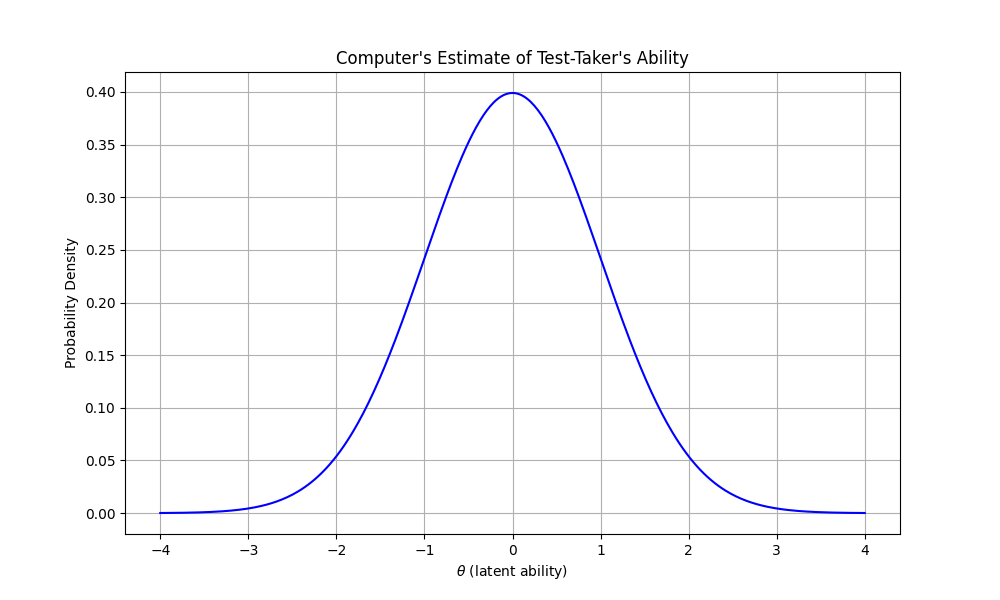

During the test, the computer has a probability distribution over the test-taker’s ability. The width of this probability distribution represents the computer’s uncertainty in its estimate.

CAT selects the next question to maximize informativeness. The informativeness of a question depends on how well it reduces the uncertainty of the computer's estimate of the test-taker's ability.

It turns out that questions are most informative when they are neither too hard nor too easy for the test-taker, given the computer's current estimate of their ability.

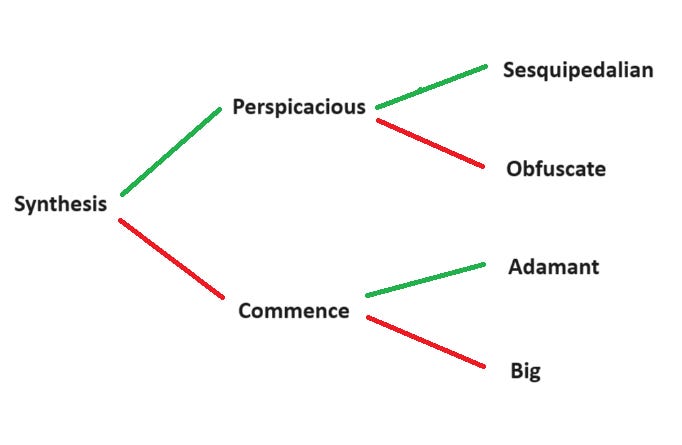

Imagine you are using CAT to estimate someone's vocabulary size. You would first start with a word of average difficulty. Based on their response, you select an easier or harder vocab word. This process continues, with each word chosen according to the computer's updated estimate of the test-taker's ability, until a precise estimate is achieved.
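The selection step described above can be sketched in a few lines. This is a minimal illustration, not a production algorithm: it assumes the simplest logistic item response curve, uses Fisher information at the current point estimate as a stand-in for "informativeness", and the pool of difficulty ratings is made up.

```python
import math

def p_correct(theta, b, a=1.0):
    """Logistic item response curve: P(correct) at ability theta for difficulty b."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def item_information(theta, b, a=1.0):
    """Fisher information of a logistic item at ability theta: a^2 * P * (1 - P)."""
    p = p_correct(theta, b, a)
    return a * a * p * (1.0 - p)

def pick_next_item(theta_hat, difficulties, used):
    """Index of the unused item that is most informative at the current estimate."""
    candidates = [i for i in range(len(difficulties)) if i not in used]
    return max(candidates, key=lambda i: item_information(theta_hat, difficulties[i]))

pool = [-2.0, -1.0, 0.0, 1.0, 2.0]   # hypothetical difficulty ratings
next_index = pick_next_item(0.8, pool, used={2})
print(pool[next_index])              # 1.0, the unused difficulty closest to 0.8
```

Under these assumptions the information is largest when the chance of a correct answer is 50%, which is why the most informative item is the one whose difficulty sits closest to the current ability estimate.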

But that seems unfair. The better you do, the harder the questions you have to answer. Wouldn’t that cause some people to get lower scores than they should?

In a fixed-form test, we can estimate someone’s ability by counting the total number of correct responses. (Though even for fixed-form tests, there are better ways to estimate a test-taker’s ability.) In CAT, we can’t estimate ability by counting the number of correct responses. In fact, in a well-designed CAT, every test-taker should answer approximately 50% of the questions correctly, regardless of their ability level.

If the goal is for the test-taker to answer roughly half the questions they receive correctly, how do we know what score to give someone?

Each item in the testing pool has a difficulty rating. Glossing over the technical details, a person’s score on the test roughly corresponds to the estimated item difficulty at which they have a 50-50 shot of answering the question correctly.

What if I miss a question on purpose so that I get easier questions?

That won’t work. Remember: maximizing the computer’s best estimate of your ability is not the same as answering the greatest number of questions correctly. To get a high score, you need to correctly answer a lot of difficult questions. If you punt on the initial questions, you won’t get shown the hard questions that really juice your score.

How does the computer update its estimate of the test-taker’s ability level over time?

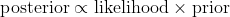

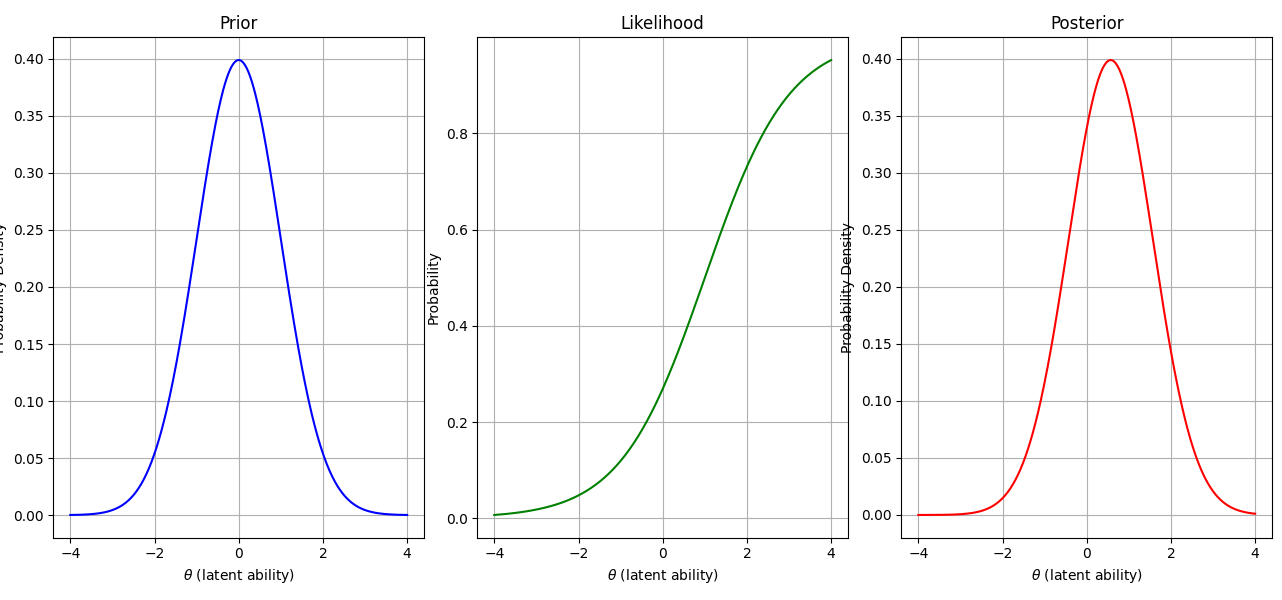

In Bayesian statistics, we have that the posterior is proportional to the likelihood multiplied by the prior.
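In symbols, with θ standing for the test-taker's ability and x for the observed response (this notation is assumed here for illustration):

```latex
p(\theta \mid x) \;\propto\; p(x \mid \theta)\, p(\theta)
```

The left-hand side is the posterior, the first factor on the right is the likelihood, and the second factor is the prior.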

Can you try that again but in English?

Bayesian statistics is a way to formalize how beliefs change over time when encountering evidence.

You start with some beliefs which are encoded in the prior, which is a probability distribution over possible outcomes. Then, when you encounter a piece of evidence, it is encoded in the likelihood, where outcomes that are more likely to generate that piece of evidence have higher likelihoods. You then combine the prior and the likelihood to get the posterior. Intuitively, if you have a high posterior probability for an outcome, that is because either (a) you already had a high prior probability for it, or (b) the evidence is much more likely to occur under that outcome than under the other outcomes.

In our case, the possible outcomes are the different latent ability levels that the test-taker could have.

The prior is the computer's current estimate of the test-taker's ability.

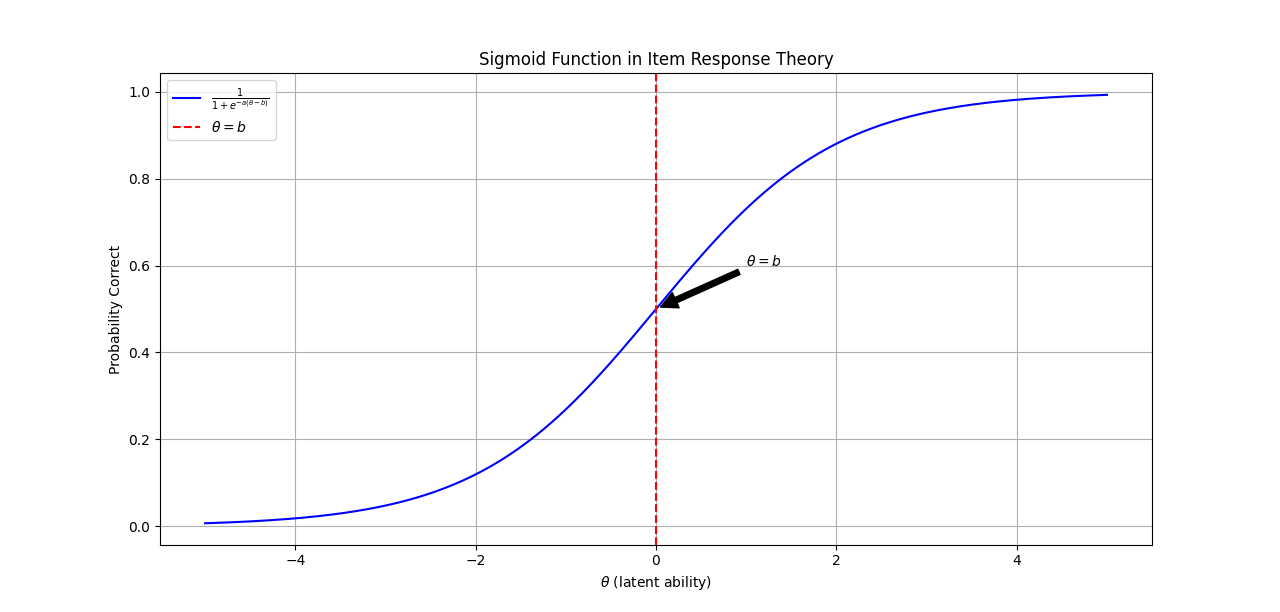

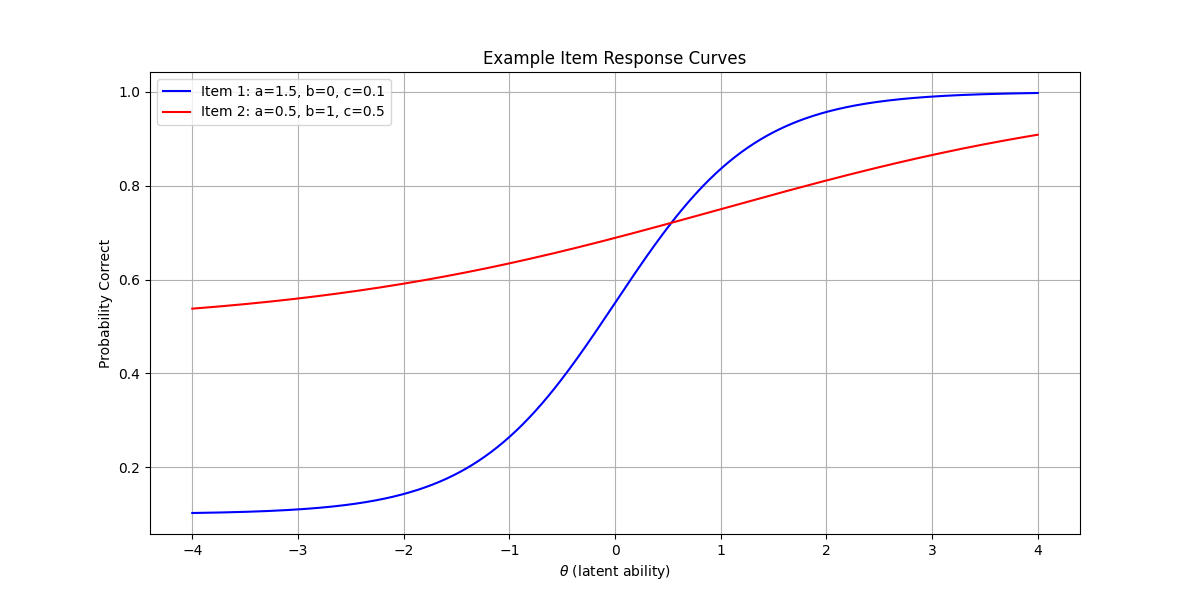

The likelihood is given by the item response curve. The item response curve tells you the probability of answering the question correctly as a function of the test-taker's ability.

The posterior is the updated best estimate of the test-taker's ability, incorporating the new information learned from whether they answered the previous question correctly or incorrectly. To obtain the posterior, you multiply the prior by the likelihood and then normalize the result.

The posterior is then used as the prior for the next question.

Given the shape of our item response curves (our likelihoods), each time you update, two things happen:

You shift your distribution right or left depending on whether the test-taker got the question correct or incorrect.

You also reduce your uncertainty by narrowing the width of your distribution.

If you want a single-number estimate of the test-taker’s ability, then you take the ability that corresponds to the peak of your posterior.
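The update described above can be sketched on a grid of candidate ability values. This is a rough illustration under assumptions of my own choosing: a bell-shaped starting prior, the simplified difficulty-only response curve, and an ability scale running from -4 to 4.

```python
import math

def p_correct(theta, b):
    """Simplified item response curve: difficulty b is the only item parameter."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def update(grid, prior, b, correct):
    """Posterior = prior * likelihood, normalized to sum to 1."""
    likelihood = [p_correct(t, b) if correct else 1.0 - p_correct(t, b) for t in grid]
    unnormalized = [l * p for l, p in zip(likelihood, prior)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]

def peak(grid, dist):
    """Ability value where the distribution is highest (the single-number estimate)."""
    return grid[max(range(len(grid)), key=lambda i: dist[i])]

def spread(grid, dist):
    """Standard deviation of the distribution, a measure of its width."""
    mean = sum(t * p for t, p in zip(grid, dist))
    return math.sqrt(sum(p * (t - mean) ** 2 for t, p in zip(grid, dist)))

grid = [i / 10 for i in range(-40, 41)]          # assumed ability scale
weights = [math.exp(-0.5 * t * t) for t in grid]  # assumed bell-shaped prior
total = sum(weights)
prior = [w / total for w in weights]

after_right = update(grid, prior, b=0.0, correct=True)
after_wrong = update(grid, prior, b=0.0, correct=False)

print(peak(grid, prior), peak(grid, after_right), peak(grid, after_wrong))
print(spread(grid, prior), spread(grid, after_right))
```

With these choices the peak moves right after a correct answer and left after an incorrect one, and the spread shrinks in both cases. To continue the test, the returned posterior would simply be passed back in as the prior for the next question.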

In the graph of the item response curve, low-ability test-takers have practically a zero percent chance of getting the question right. But what about guessing?

Good observation. The item response curve I showed was a simplification where the only property of the curve was its difficulty. But there are actually three parameters to every item:

a, the discrimination parameter. This tells you the extent to which the item differentiates between high-ability test-takers and low-ability test-takers. It corresponds to the steepness of the item response curve at its inflection point.

b, the difficulty parameter. As its name suggests, it indicates how difficult the question is. It corresponds to the location of the inflection point on the ability scale.

c, the guessing parameter. This tells you the probability of someone with no knowledge of the subject matter getting the question correct by guessing. It corresponds to the horizontal asymptote of the item response curve as ability approaches negative infinity.
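One common way to combine the three parameters is the three-parameter logistic curve. The sketch below assumes that functional form (the post does not say which form its graphs use), and the parameter values are invented for illustration.

```python
import math

def p_correct_3pl(theta, a, b, c):
    """Three-parameter logistic curve: floor c, location b, steepness controlled by a."""
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))

# Hypothetical four-option multiple-choice item.
a, b, c = 1.5, 0.5, 0.25

print(p_correct_3pl(-10.0, a, b, c))  # very close to c: essentially pure guessing
print(p_correct_3pl(b, a, b, c))      # 0.625, i.e. (1 + c) / 2 at the inflection point
print(p_correct_3pl(10.0, a, b, c))   # very close to 1
```

Note that once guessing is allowed, a test-taker whose ability equals the item's difficulty has a better than 50% chance of answering correctly, because the curve now runs from c up to 1 rather than from 0 up to 1.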

What makes for a quality item?

In terms of item parameters, a quality item should have:

High a. A quality item is one with high discrimination: where there is a sharp transition between people who can get the answer right and people who can't get the answer right.

Low c. There should be a low probability of guessing the right answer.

And within the item pool, there should be a diversity of difficulties. The ideal item pool is rectangular, with an equal number of items at every difficulty level.

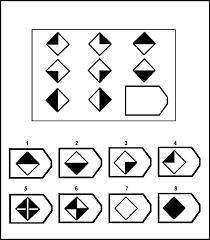

We also care about what is called "face validity". Face validity means that the item looks like it belongs on the test. You can have an item that, from a psychometric point-of-view, has all the desirable properties. But if it doesn't appear to belong, then the test looks bad.

For example, the question shown above is from Raven's Progressive Matrices, a type of IQ test. If you were to include this question on the SAT, it would likely have decent discrimination. However, even though the SAT is essentially an IQ test, it is ostensibly designed to assess college-preparedness and scholastic aptitude. A Raven's Matrices question would seem out of place and have poor face validity on the SAT.

What if I am good at different things? Like what if I am really good at math but bad at reading? I would get hard math questions right, but miss easy reading questions. What then?

This would be an important part of the test-designing process. For CAT to work, you must be able to represent ability and item difficulty along a single dimension. This assumption is called unidimensionality, and it goes hand in hand with a second assumption called local independence. Local independence means that once you know someone's latent ability, you have all the information that exists to predict whether they will get a particular item correct. Both assumptions are tested during the validation process.

If there are clusters of questions that break local independence, you can divide your CAT into subtests. For example, a test with both reading and math questions would break local independence, as some people are better at verbal tasks than math tasks, and vice versa. To address this, standardized tests separate reading and math into different subtests.

When is the test over?

You can either have a fixed number of questions or you can have a stopping rule. A common stopping rule is that the test stops when the computer’s uncertainty in the test-taker’s ability becomes smaller than some pre-determined value.
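Here is a rough sketch of a whole test loop that uses both kinds of stopping condition at once. Everything specific in it is an assumption made for illustration: the simulated test-taker, the 200-item pool, the uncertainty target of 0.4, the cap of 50 questions, and the use of the posterior mean as the running estimate.

```python
import math
import random

def p_correct(theta, b):
    """Simplified item response curve: difficulty b is the only item parameter."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def mean_and_sd(grid, dist):
    """Mean and standard deviation of a discrete distribution over the grid."""
    mean = sum(t * p for t, p in zip(grid, dist))
    var = sum(p * (t - mean) ** 2 for t, p in zip(grid, dist))
    return mean, math.sqrt(var)

def run_cat(true_theta, pool, sd_target=0.4, max_items=50, seed=0):
    rng = random.Random(seed)                      # simulated test-taker
    grid = [i / 10 for i in range(-40, 41)]        # assumed ability scale
    dist = [math.exp(-0.5 * t * t) for t in grid]  # assumed bell-shaped prior
    total = sum(dist)
    dist = [d / total for d in dist]
    unused = list(pool)
    asked = 0
    estimate, sd = mean_and_sd(grid, dist)
    # Stop when uncertainty is small enough, the cap is hit, or the pool runs dry.
    while unused and asked < max_items and sd > sd_target:
        # Ask the unused item whose difficulty is closest to the current estimate.
        b = min(unused, key=lambda d: abs(d - estimate))
        unused.remove(b)
        correct = rng.random() < p_correct(true_theta, b)
        # Bayesian update: multiply by the likelihood, then normalize.
        dist = [(p_correct(t, b) if correct else 1.0 - p_correct(t, b)) * p
                for t, p in zip(grid, dist)]
        total = sum(dist)
        dist = [d / total for d in dist]
        asked += 1
        estimate, sd = mean_and_sd(grid, dist)
    return estimate, sd, asked

pool = [-3.0 + 6.0 * i / 199 for i in range(200)]  # hypothetical rectangular pool
estimate, sd, asked = run_cat(true_theta=1.0, pool=pool)
print(estimate, sd, asked)
```

Tightening `sd_target` trades a longer test for a more precise estimate, while `max_items` keeps the test from running indefinitely for someone the pool cannot pin down.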

This is all cool in theory, but how do we know that it works in practice?

While not as popular as traditional testing, CAT is being used in limited forms in many popular standardized tests. The Graduate Record Examination (GRE) and the Graduate Management Admission Test (GMAT) are both adaptive tests. The Armed Services Vocational Aptitude Battery (ASVAB) also offers a CAT version in addition to a traditional pencil-and-paper version of the test. Even the SAT has recently gone digital and now uses an adaptive format.

However, the GRE, the GMAT, and the SAT don't update the difficulty after every question. Instead, they update the difficulty between sections. In the case of the GRE, each subtest of the exam (math and verbal) is divided into two sections. The first section is of average difficulty, while the second section is adapted in difficulty based on the test-taker's performance in the first section.
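Section-level adaptation can be pictured as a single routing decision made between sections. The sketch below is purely illustrative: the function name, the three difficulty tiers, and the cutoffs are all invented, and none of them reflect the actual routing rules of the GRE, GMAT, or SAT.

```python
def route_second_section(num_correct, num_questions, low_cut=0.4, high_cut=0.7):
    """Pick a second-section difficulty from first-section performance.

    The tiers and cutoffs are hypothetical placeholders, not real exam rules.
    """
    if num_questions <= 0:
        raise ValueError("num_questions must be positive")
    fraction = num_correct / num_questions
    if fraction < low_cut:
        return "easier"
    if fraction < high_cut:
        return "medium"
    return "harder"

print(route_second_section(6, 20))   # easier
print(route_second_section(11, 20))  # medium
print(route_second_section(17, 20))  # harder
```

Compared with updating after every question, this adapts only once per section, so the match between question difficulty and ability is coarser.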

So if CAT is so much better than traditional testing, why isn’t it widely adopted?

While CAT has some nice properties, there are several drawbacks associated with it. For one, implementing CAT requires the use of a computer. Though with the rising ubiquity of laptops among students, this is increasingly less of a concern.

The biggest drawback to CAT is that it is expensive to construct the item pool.

Why is constructing the item pool so expensive?

One reason is that CAT needs a much larger pool of questions compared to a traditional fixed-form test. This large item pool is necessary in order to effectively assess test-takers across the full range of abilities. Also, when the test is meant to be taken on a rolling basis, a large item pool helps maintain test security. Even if a test-taker memorizes and shares a specific question, the likelihood of another test-taker encountering that same question is reduced due to the pool's size.

It's also necessary for each test item to be validated individually. Unlike fixed-form tests where small gender differentials in individual items can balance out across the entire test, CAT requires each test item to be unbiased since test-takers may not receive the same set of questions.

Relatedly, there is the problem of information dependencies. Information dependencies, where one question provides hints or answers to another question, can be more challenging to control in a CAT system due to the large number of possible test combinations. In a fixed-form test, these dependencies can be identified and minimized by scanning the finite set of questions.

Developing a CAT suitable for high-ability test-takers presents a unique challenge. To effectively discriminate among the highly-able, CAT requires a large bank of high-difficulty questions that have been validated on a population of high-scorers. This leads to a chicken-and-egg problem: the advantages of CAT for high-ability test-takers depend on the prior existence of an appropriate question bank, but creating this question bank requires access to a large number of high-ability test-takers.

Why are you so obsessed with differentiating between people of high ability?

Currently, high ability at the high school level is identified through opt-in, high-level competitions like Math Olympiads or science fairs such as the Regeneron Science Talent Search. However, competitions and science fairs both have limitations. Competitions are better at assessing mathematical as opposed to verbal intelligence. Science fairs are heavily biased in favor of students from high socioeconomic backgrounds who have the necessary resources, such as mentorship and lab equipment, to complete a high-level research project.

As a result, if there is an intellectually gifted student with an IQ of 160 who doesn't have access to the right environment, they may not be identified.

But I thought that no one has an IQ of 160?

Whenever you ask yourself a question about IQ, a good way to deconfuse yourself is to instead turn it into an equivalent question about height.

In the US, the average adult male height is 5 feet 9 inches (69 inches) with a standard deviation of 3 inches. So a height four standard deviations above the mean is roughly 6 feet 9 inches (81 inches). That's really rare! But does that mean that no one is taller than 6-foot-9?
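To put a rough number on "really rare", here is the arithmetic under the assumption that height follows a normal distribution exactly (real tails need not, so treat this as a back-of-the-envelope figure). The population of 100 million is a round hypothetical number, not a census count.

```python
import math

mean_in, sd_in = 69.0, 3.0    # figures from the text
z = 4.0                       # four standard deviations above the mean

cutoff = mean_in + z * sd_in  # 81.0 inches, i.e. 6 feet 9 inches
tail = 0.5 * math.erfc(z / math.sqrt(2))  # P(Z > 4) for a standard normal

print(cutoff)                 # 81.0
print(tail)                   # about 3.17e-05, roughly 1 in 31,600
print(tail * 100_000_000)     # about 3,167 people in a hypothetical 100 million
```

So "four standard deviations out" is rare for any one person, yet in a large enough population it still describes thousands of people. On a scale with a mean of 100 and a standard deviation of 15, an IQ of 160 sits at the same four standard deviations.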

Imagine a world exactly like our own except we can't measure people's height directly (maybe rulers are illegal). The best way we have to estimate someone's height is to have them dunk a basketball, many different times in many different ways under many different circumstances. In this world, it would be hard to know for sure that someone was 7 feet tall. Sure, that person is really good at dunking. But what if they are "just" a 6-foot-8 person who can jump really high?

That's the world we live in with respect to IQ.

Okay, but I thought that after a certain point, IQ stops mattering?

That’s a myth.

Because of studies like the Study of Mathematically Precocious Youth (SMPY), we know that there are no diminishing returns to higher levels of cognitive ability.

The SMPY was a longitudinal study that identified talented 13-year-olds by administering the SAT to them. The study then tracked the participants' career trajectories over time. Because the SAT was administered at such a young age, it served as a high-ceiling test that was able to discriminate between ability levels at the far right tail of the distribution. And what was found was that even within the elite sample of the SMPY, the higher scorers were more likely to complete PhDs in STEM fields and more likely to get patents. There is a quantifiable difference between someone with an IQ of 145 versus someone with an IQ of 160.

But aren’t all people with IQs higher than mine on the autism spectrum?

That’s a myth as well.

While it’s hard to get a precise estimate of the correlation, intelligence and social skills are likely to be mildly positively correlated. If you are wondering where these extremely intelligent people with polished social skills are, you might not notice them because (a) smart people with social skills are busy running the world and therefore might not occupy your social circle, and (b) smart people with social skills know how to modulate their intellectual level in order to fit in.

You seem elitist.

That’s not a question.

Why are you so elitist?

I don’t really consider myself “elitist”. But I do consider myself a fan of what you might call the “meritocracy”. If you look at any domain where we can objectively measure performance—like chess or track & field—we see that the very best performers are qualitatively better than even their other world-class competitors. Identifying the best young talent allows us to develop the people who have the greatest potential to make an outstanding contribution to society.

But intelligence isn’t everything. What about conscientiousness? Risk-taking? Creativity? Character?

Those things do matter! If you consider the case of income, for example, studies have found that the correlation between income and intelligence is only around 0.4. Success is invariably the result of many different factors, many of which aren't amenable to measurement by a standardized test.

And that's not even getting into the fact that "success," narrowly defined, is not the only thing that we as a society should value. You should think about computerized adaptive testing as just one tool out of many for helping to identify talent.

"The technocapital sorting machine would start early—well before birth. In this society, every person has a social status score: a composite index based on both their genetic potential and their actualized achievement. When someone wants to reproduce, they have to fill out an application with the Department of Family Planning (DFP). The DFP matches them with a genetically-compatible person of a similar social status score. After multiple rounds of polygenic screening and a few low-risk CRISPR edits, the fetus gestates in the uterus of a genetically-modified cow. When the baby is born, it’s ripped away from its parents and sent to a dedicated nursery where extensive biometrical and psychometric tests are performed daily to determine the child’s rightful place in society."

At least you asked if it was supposed to be funny!